Google Gemini AI 1.0

Gemini 1.0 is Google’s latest AI model. First previewed at Google’s annual I/O developer conference in May 2023 and formally announced on December 6, 2023, it is now integrated into Bard. Gemini stands out for its multimodal capabilities: it can understand and work with text, code, audio, images, and video, which makes it a direct challenger to other generative AI systems such as ChatGPT.

Gemini 1.0 is designed to process several kinds of input together, loosely analogous to the way people combine what they see, hear, and read. Integrating data from different sources in this way gives Gemini a more comprehensive understanding of what it processes.

Gemini’s Different Versions and Their Applications

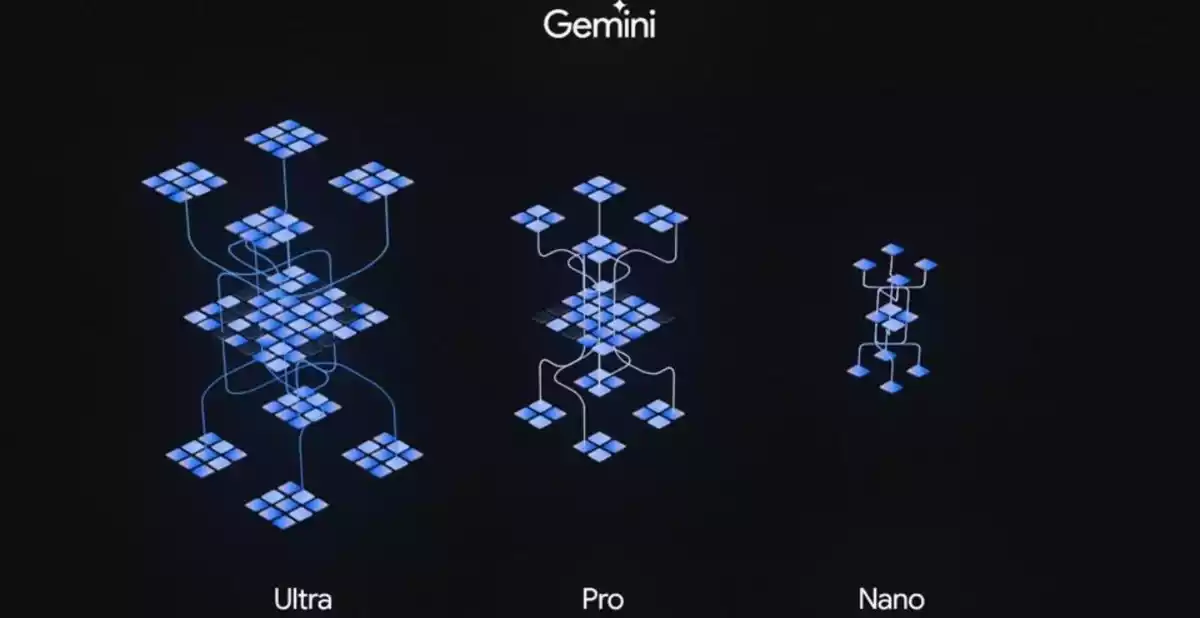

Google offers Gemini 1.0 in three versions, each optimized for a different range of tasks:

- Gemini Ultra: This is the largest and most capable version, optimized for complex tasks like advanced reasoning, coding, and solving mathematical problems. Gemini Ultra will be available to select customers, developers, partners, and safety and responsibility experts for early testing and feedback. Additionally, Google plans to launch “Bard Advanced,” giving users access to Gemini Ultra’s capabilities.

- Gemini Pro: Suitable for a variety of tasks, Gemini Pro is available to developers and enterprise customers through the Gemini API in Google AI Studio or Google Cloud Vertex AI. A fine-tuned version of it also powers the current version of Bard in over 170 countries and territories.

- Gemini Nano: Optimized for on-device AI tasks, Gemini Nano can run offline on Android-based smartphones and other devices. It’s being rolled out in Google’s Pixel 8 Pro smartphone and powers features like Summarize in the Recorder app and Smart Reply for Gboard, with plans to expand to more messaging apps.

| Version | Description |
|---|---|
| Gemini Ultra | Largest, most capable model for complex tasks. Available for early testing and feedback. |
| Gemini Pro | Suitable for various tasks, integrated into Bard and available via Google AI Studio and Google Cloud Vertex AI. |
| Gemini Nano | Optimized for on-device tasks, currently powers features in Pixel 8 Pro and Gboard. |

Gemini AI’s Unique Features

What sets Gemini apart is its multimodal capacity: a single model handles text, code, audio, images, and video. Google describes Gemini as the most flexible model it has delivered, and it comes in different “sizes” to suit applications ranging from data centers to mobile devices.
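To make the idea of a multimodal prompt concrete, here is a minimal Python sketch of a request body that mixes text and an image in one prompt. The field names (`contents`, `parts`, `inline_data`, `mime_type`) follow the JSON shape of the Gemini API’s REST interface as documented around launch and should be treated as assumptions. The helper functions and the placeholder image bytes are hypothetical, and nothing is sent over the network.

```python
import base64
import json


def text_part(text: str) -> dict:
    """Wrap plain text as one part of a prompt."""
    return {"text": text}


def image_part(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Wrap raw image bytes as a base64-encoded inline part (assumed field names)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"inline_data": {"mime_type": mime_type, "data": encoded}}


def build_multimodal_contents(parts: list) -> dict:
    """Combine an ordered list of parts into a single-turn request body."""
    return {"contents": [{"parts": parts}]}


# Placeholder bytes standing in for a real image file.
fake_image = b"\x89PNG placeholder bytes"

payload = build_multimodal_contents(
    [text_part("Describe this image."), image_part(fake_image)]
)
print(json.dumps(payload, indent=2))
```

The point of the sketch is that text and image travel together as parts of one prompt, instead of being routed to separate models.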

Integration and Availability

Gemini is already integrated into the Bard chatbot, which is available in over 170 countries and territories. On the Pixel 8 Pro, Gemini Nano helps users with suggested replies. Starting December 13, developers and enterprise customers can access Gemini Pro through the Gemini API in Google AI Studio or Google Cloud Vertex AI, and further integrations into products like Search, Ads, Chrome, and Duet AI are expected in the coming months.
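For developers, a text request to Gemini Pro might look roughly like the following Python sketch, which uses only the standard library. The endpoint URL, the `gemini-pro` model name, and the request and response shapes reflect the Gemini API’s REST interface as documented around the 1.0 launch. Treat them as assumptions that may have changed since. The function names are hypothetical.

```python
import json
import urllib.request

# Assumed base URL of the Gemini API REST interface at launch.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(prompt: str, api_key: str,
                           model: str = "gemini-pro") -> urllib.request.Request:
    """Build (but do not send) a POST request asking the model for text."""
    url = f"{API_BASE}/models/{model}:generateContent?key={api_key}"
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate_text(prompt: str, api_key: str) -> str:
    """Send the request and return the first candidate's text (assumed response shape)."""
    request = build_generate_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        reply = json.load(response)
    return reply["candidates"][0]["content"]["parts"][0]["text"]
```

With an API key from Google AI Studio, calling `generate_text("Summarize Gemini 1.0 in one sentence.", api_key)` would return the model’s reply. Building the request separately from sending it keeps the payload easy to inspect without network access.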

Future Developments

In 2024, Google plans to roll out Gemini Ultra after completing extensive trust and safety checks. Before the broad release, the model is being further refined through fine-tuning and reinforcement learning from human feedback (RLHF). Select developers and experts will have early access to provide feedback. Bard Advanced, incorporating the latest models including Ultra, is also set to be introduced in 2024.

Google Gemini AI represents a significant step in AI technology: a multimodal, flexible, and scalable model that can work across many data types. Its three versions cover applications from complex reasoning to on-device features. With Gemini already integrated into Bard and Pixel smartphones, and with Ultra and Bard Advanced planned for 2024, Gemini is poised to become an integral part of Google’s AI ecosystem.